Using Schema & Knowledge Graph Signals for AI Visibility

To properly optimize for AI, it is essential to first understand how AI processes information. …

SEO - 30/01/2026 - 19 min read

The digital marketing ecosystem has undergone a fundamental structural shift. For more than twenty years, the primary mechanism of online discovery was the search engine results page (SERP), a list of ten blue links that directed users to external websites. We have now transitioned into the era of the “Answer Engine.”

The integration of Generative AI into Google Search, specifically through AI Overviews and the dedicated AI Mode, has rewritten the implicit contract between the search engine and the publisher. We are no longer merely optimizing for a ranking position; we are optimizing for a citation. This guide to using Google Search Console for AI search and SEO is designed to help you navigate this volatile environment.

The stakes could not be higher. As of late 2025 and moving into 2026, the data indicates a massive disruption in traditional traffic patterns. The days of predictable click-through rates (CTR) based solely on ranking position are over. Marketing managers and SEO strategists are facing a dual crisis: a collapse in organic traffic for informational queries and a “black box” reporting environment within Google Search Console (GSC) that obscures the true source of impressions.

The metrics that once served as reliable indicators of health, impressions and average position, have become decoupled from actual site visits. A rising impression count in GSC, once a cause for celebration, may now simply indicate that your content was used to generate an AI answer that resolved the user’s question without ever sending them to your site.

This report is not a theoretical discussion of artificial intelligence. It is a tactical manual for survival and growth. We will examine the specific mechanics of how Google’s Gemini-powered search functions, dissect the data explaining the dramatic drop in organic CTR, and provide a step-by-step methodology for using Google Search Console to reclaim visibility. We will explore “Generative Engine Optimization” (GEO), a new discipline that requires a complete rethinking of content structure, and we will detail the technical requirements for establishing the Entity Authority necessary to be cited by Large Language Models (LLMs). The goal is to move beyond the passive tracking of decline and toward an active strategy of citation capture.

To effectively utilize Google Search Console insights in this new environment, it is necessary first to understand the technological shift that has occurred. Traditional search engines operated on an index-and-retrieve model. The engine crawled the web, indexed pages based on keywords and links, and retrieved the most relevant documents when a user entered a query. The user then performed the work of synthesis, clicking on multiple links to gather information.

The new model, driven by Retrieval-Augmented Generation (RAG), fundamentally changes this workflow. When a user inputs a complex query, the AI does not just retrieve documents; it reads them, synthesizes the information, and generates a novel response. This response, the AI Overview, sits at the top of the SERP, often occupying the entire visible area on mobile devices.

In the past, an impression in Google Search Console meant a user saw a link to your site. If they found it relevant, they clicked. Today, an impression in GSC often means your content was “seen” by the user only as a component of an AI-generated answer. The user may have read a summary derived from your content without ever seeing your brand name or URL, or they may have seen a small citation link that they felt no need to click because the summary satisfied their intent.

This creates a dangerous divergence in GSC data. You may see impression volumes remain stable or even increase as Google tests AI Overviews on more queries. However, clicks will flatline or decline. Without understanding this mechanic, a marketing manager might erroneously conclude that their title tags are ineffective or that their meta descriptions need optimization. The reality is that the user’s need was met on the SERP itself. The “Zero-Click” phenomenon, which has been growing for years, has been accelerated by AI to encompass not just simple calculations or weather forecasts, but complex, multi-step informational queries that were previously the bread and butter of content marketing.

It is also critical to distinguish between the two primary AI interfaces, as they affect GSC data differently. First, there are AI Overviews. These are the passive, auto-generated summaries that appear at the top of standard search results. They are triggered automatically by Google when the algorithm determines that a generative response is more helpful than a list of links. This usually happens for “how-to” questions, comparisons, and complex informational topics. Second, there is AI Mode. This is a specific, conversational interface—often accessed via a dedicated tab or a distinct entry point on mobile—where users engage in a back-and-forth dialogue with the AI. This mode is designed for exploration, reasoning, and deep research.

The confusion in reporting arises because Google has chosen to merge data from both these experiences into the standard “Web” search performance report in GSC. This decision has stripped SEO professionals of the ability to easily segment “traditional” search traffic from “AI” traffic, creating the analytical blind spot that this report aims to illuminate.

Understanding the magnitude of the shift is essential for setting realistic expectations and strategy. We must look at the hard data to understand what is happening to click-through rates and why the old benchmarks for success are no longer valid.

The most definitive data on this subject comes from Seer Interactive’s extensive study conducted in September 2025. This research provides a stark quantification of the damage AI Overviews have done to traditional organic traffic.

For search queries where an AI Overview is present, the organic click-through rate has plummeted to a staggering 0.61%. To put this in perspective, historically, a result ranking in the top position could expect a CTR of anywhere from 20% to 35%. A drop to below 1% essentially means that for these specific queries, organic search as a traffic driver has ceased to exist in its traditional form. The user is consuming the AI answer and leaving.

The impact is not limited to organic listings. The same study revealed that paid search CTR also crashed for these queries, dropping from roughly 19.7% to 6.34%. This represents a 68% decline in ad engagement. This suggests a fundamental change in user behavior: when an AI answer is provided, users are ignoring both the organic links and the paid advertisements. They are finding satisfaction in the summary.
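The 68% figure is a relative decline, not a difference in percentage points. A minimal sketch of the arithmetic, using only the two paid CTR values quoted above (the function name is illustrative):

```python
def relative_decline(before: float, after: float) -> float:
    """Return the percentage drop from `before` to `after`."""
    return (before - after) / before * 100


# Paid CTR values quoted above for queries where an AI Overview is present.
paid_drop = relative_decline(19.7, 6.34)
print(f"Paid CTR decline: {paid_drop:.1f}%")  # prints "Paid CTR decline: 67.8%"
```

Rounded to the nearest whole number, this matches the 68% decline stated in the text.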

However, the data also reveals a critical opportunity—a lifeline for brands willing to adapt. While the general organic results are suffering, the links that are explicitly cited within the AI Overview perform significantly better.

Brands that manage to secure a citation within the AI response see a 35% increase in organic clicks compared to those that do not. This is the new “Position 1.” The goal of SEO is no longer just to rank at the top of the blue links; it is to be the source that the AI quotes.

Furthermore, the impact on paid search when a brand is cited in the AI Overview is even more dramatic. Paid clicks for cited brands are 91% higher than for non-cited brands. This indicates a powerful synergy: when the AI validates a brand as an authority by citing it, users are much more likely to click on that brand’s ads, viewing them as credible solutions rather than intrusive marketing.

This bifurcation of performance—where general results die but cited results thrive—dictates our new strategy. We must stop optimizing for the list and start optimizing for the answer box.

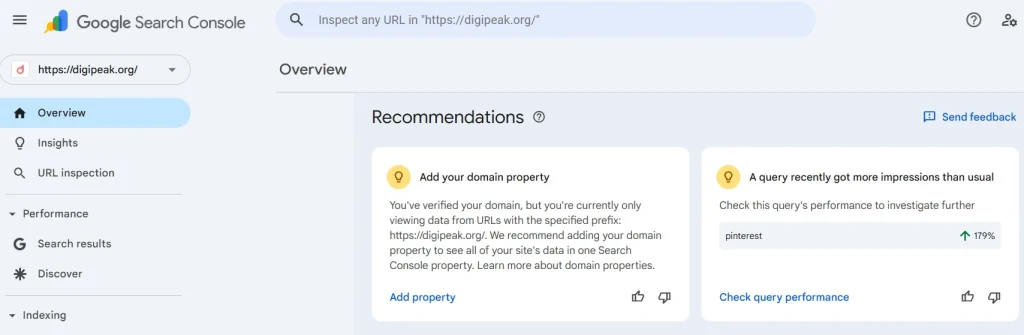

The primary operational challenge for SEO professionals in 2025/2026 is that Google Search Console does not make it easy to see this data. Google has made a deliberate choice to integrate AI visibility metrics into the general “Web” search bucket, rather than creating a separate “AI” filter or report.

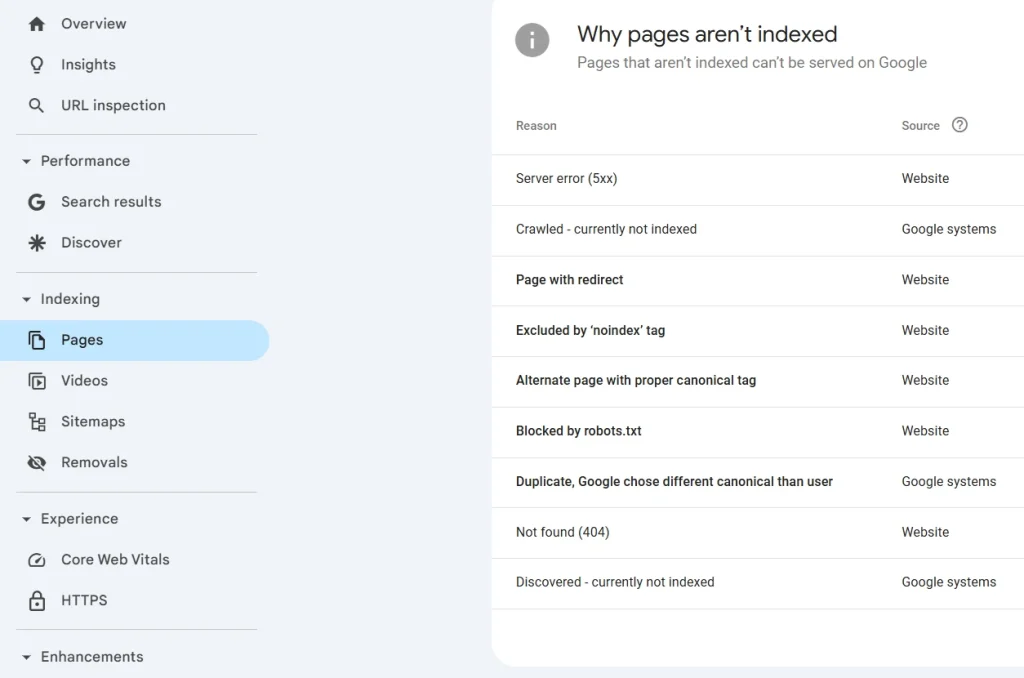

As of the June 17, 2025 update, all impressions and clicks generated from AI Mode and AI Overviews are counted toward the “Web” totals in the Performance report. This means that if you look at your main performance graph, you are seeing a mix of traditional organic listings, AI Overview appearances, and AI Mode appearances.

This aggregation creates a misleading picture. You might see a keyword like “best CRM software” generating 10,000 impressions and assume you are ranking well. However, if 9,000 of those impressions are from an AI Overview where you were not cited (or were cited but not clicked), your CTR will appear artificially low. You might try to “fix” this by rewriting your title tag, not realizing that your title tag was never seen by the user because the AI Overview pushed it down the page.
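To see why the blended number misleads, it can help to work the example through. GSC does not expose this split, so the click counts below are invented purely for illustration; only the 10,000 and 9,000 impression figures come from the example above.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage (0.0 when there are no impressions)."""
    return clicks / impressions * 100 if impressions else 0.0


# Hypothetical split of the 10,000 impressions; click counts are assumptions.
classic_impressions, classic_clicks = 1_000, 50
ai_impressions, ai_clicks = 9_000, 45

classic_ctr = ctr(classic_clicks, classic_impressions)
ai_ctr = ctr(ai_clicks, ai_impressions)
blended_ctr = ctr(classic_clicks + ai_clicks, classic_impressions + ai_impressions)

print(f"Classic listing CTR: {classic_ctr:.2f}%")
print(f"AI Overview CTR:     {ai_ctr:.2f}%")
print(f"Blended CTR in GSC:  {blended_ctr:.2f}%")
```

Under these assumed numbers the classic listing performs well, yet the blended figure that GSC reports sits close to the AI Overview rate, because that segment supplies most of the impressions.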

GSC provides no dimension to filter by “AI Presence.” You cannot segment your data to see “Queries with AI Overviews” versus “Queries without.” You also cannot see “AI Citations” as a metric. This lack of transparency forces us to rely on inference and external validation. We must use the tools we have—specifically regular expressions (Regex) and external rank tracking—to reconstruct the data that Google is hiding.

We are operating in a blind spot, but it is not a black hole. By understanding the types of queries that trigger AI (questions, comparisons, long-tail searches), we can isolate the segments of our traffic that are most likely being affected. We will detail exactly how to do this in the strategic recommendations section.

If the problem is the transition from Search to Answer, the solution is the transition from SEO to GEO: Generative Engine Optimization. GEO is the art and science of structuring content so that it is easily understood, verified, and cited by Large Language Models.

Traditional SEO was about proving relevance to an algorithm using keywords and backlinks. GEO is about proving information gain and authority to a model using structure and entities.

AI models are trained to extract facts. They are efficiency engines. They do not want to wade through 500 words of “fluff” introduction to find the answer. To capture the citation, you must adopt the “Inverted Pyramid” style of journalism, but adapted for AI.

Every piece of informational content should begin with a Direct Answer Block. This is a 50-to-70-word summary that directly answers the target query. If the keyword is “how to calculate ROI,” the very first paragraph should be a definition of ROI followed by the formula. It should not be a paragraph about why ROI is important.

This “Direct Answer” serves two purposes. First, it is the ideal format for the AI to grab and display as the core of its summary. Second, it improves user experience for the human reader who wants a quick answer.
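A quick length check on the opening paragraph can be automated. The sketch below assumes the article is available as plain text with paragraphs separated by blank lines; the function names and the sample text are illustrative, and the 50-to-70-word range is the one given above.

```python
def direct_answer_word_count(article_text: str) -> int:
    """Count whitespace-separated words in the first non-empty paragraph."""
    for paragraph in article_text.split("\n\n"):
        if paragraph.strip():
            return len(paragraph.split())
    return 0


def is_direct_answer_length(article_text: str, low: int = 50, high: int = 70) -> bool:
    """True when the opening paragraph falls inside the target word range."""
    return low <= direct_answer_word_count(article_text) <= high


sample = (
    "Return on investment (ROI) is a ratio that compares the net profit of an "
    "investment with its cost. To calculate it, subtract the cost of the "
    "investment from the amount it returned, divide the result by the cost, "
    "and multiply by 100 to express it as a percentage: "
    "ROI = (net profit / cost of investment) x 100.\n\n"
    "The rest of the article follows here."
)
print(direct_answer_word_count(sample), is_direct_answer_length(sample))
```

Word count is only a proxy. The check says nothing about whether the paragraph actually answers the query, so it works best as a pre-publication reminder rather than a quality gate.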

Analysis of content that successfully earns citations in AI Overviews reveals a consistent pattern of traits. We can summarize this as the CSQAF Framework:

Citations: High-performing content does not exist in a vacuum. It cites other authoritative sources. AI models use outbound links to trusted domains (like government sites, academic institutions, or major industry publications) as a proxy for research depth. You must link out to prove you have done your homework.

Statistics: AI models love data. Vague claims are ignored. Specific data points are cited. Do not say “traffic dropped significantly.” Say “traffic dropped by 61%.” Providing original data, or curating specific statistics, increases the “fact density” of your content, making it more attractive for extraction.

Quotations: Including direct quotes from subject matter experts (SMEs) signals authority. The AI looks for consensus and expert validation. If you are writing about medical trends, quote a doctor. If you are writing about SEO, quote a known practitioner.

Authoritativeness: This refers to the “Entity” status of the author. We will discuss this in depth in the technical section, but content must be clearly attributed to a verified expert, not a generic “staff” account.

Fluency: The content must be grammatically perfect and logically structured. AI models are language processors; they prioritize content that follows clear linguistic patterns. Use simple sentence structures and active voice.

Beyond the words themselves, the HTML structure of your page communicates priority to the AI.

Question-Based Headings: Your H2 and H3 tags should mirror the natural language questions users are asking. Instead of a heading that says “Tracking Methodology,” use a heading that says “How do I track AI traffic in GSC?”. This explicit mapping helps the AI understand exactly which part of your text answers which user intent.

Structured Lists and Tables: Whenever possible, format information as a list or a comparison. If you are comparing two products, do not write paragraphs of text. Use a structure that separates the features clearly. While we are not using tables in this report, your content should utilize them heavily. AI models are incredibly efficient at scraping tabular data to generate their own comparison matrices.

The Freshness Imperative: AI answer systems show a bias toward recency, because outdated information is a common source of inaccurate answers. Content freshness is therefore a major factor in earning AI citations. You must adopt a strategy of Relentless Content Updates.

Any article that contains statistics, dates, or time-sensitive advice should be refreshed quarterly. Simply changing the publish date is not enough; you must update the data points. Adding “2026” to your title tag and updating the year in your intro may signal relevance, but updating the actual underlying facts is what secures the citation.

In the era of AI, Google is moving away from the “Web Graph” (links between pages) and toward the “Knowledge Graph” (relationships between entities). An Entity is a distinct, well-defined concept—a person, a place, a corporation, or a creative work—that Google understands as a “thing,” not just a string of characters.

To be cited as a source of truth, your brand and your authors must be established as trusted Entities in Google’s Knowledge Graph.

It is no longer acceptable to publish content under a generic “Admin” or “Marketing Team” byline. Every piece of content must be attributed to a specific human expert. This expert needs a digital footprint that Google can verify.

Author Bio Pages: You must have robust author bio pages on your site. These pages should list the author’s credentials, education, job title, and years of experience. Crucially, they should link out to the author’s profiles on other trusted platforms like LinkedIn, Crunchbase, or MuckRack.

SameAs Schema: You must use Person schema markup on these bio pages. Within this schema, use the sameAs property to link to those external profiles. This tells Google: “The John Smith on this blog is the same as the John Smith on this LinkedIn profile who is a professor at this university.” This process, known as Reconciliation, allows Google to merge the signals of authority from across the web into a single Entity profile for that author.
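As a rough illustration of the markup described above, the sketch below builds a schema.org Person object with a sameAs list and wraps it in a JSON-LD script tag for the bio page. The helper name, the author details, and every URL are placeholders, not real profiles.

```python
import json


def person_jsonld(name: str, job_title: str, bio_url: str, same_as: list[str]) -> str:
    """Build a schema.org Person JSON-LD <script> tag for an author bio page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "url": bio_url,
        # External profiles that describe the same person.
        "sameAs": list(same_as),
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
person_snippet = person_jsonld(
    name="John Smith",
    job_title="Professor",
    bio_url="https://example.com/authors/john-smith",
    same_as=[
        "https://www.linkedin.com/in/john-smith-example",
        "https://example.edu/faculty/john-smith",
    ],
)
print(person_snippet)
```

Generating the markup from one function keeps the author data in a single place, so the bio page and the schema are less likely to drift apart.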

Your brand itself must also be a recognized Entity. The easiest way to check this is to search for your brand name in Google. If a Knowledge Panel appears on the right side of the desktop results, you are a recognized Entity. If not, you have work to do.

Organization Schema: Your homepage must contain detailed Organization schema. This should include your logo, your official name, your “SameAs” links to social profiles, and your contact information. This serves as the digital passport for your brand.
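A comparable sketch for the homepage, again with placeholder values throughout: it assembles a schema.org Organization object and checks that the fields listed above (name, logo, sameAs links, contact information) are filled in before the markup is emitted. The field checklist reflects this article's recommendations and is an assumption, not an official requirement.

```python
import json

# Placeholder organization data; every value here is hypothetical.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Co",
    "url": "https://example.com",
    "logo": "https://example.com/logo.png",
    "sameAs": [
        "https://www.linkedin.com/company/example-co",
        "https://x.com/example_co",
    ],
    "contactPoint": {
        "@type": "ContactPoint",
        "telephone": "+1-555-010-0000",
        "contactType": "customer service",
    },
}

# Fields this article recommends; the list is an assumption, not a spec.
RECOMMENDED_FIELDS = ("name", "url", "logo", "sameAs", "contactPoint")
missing_fields = [key for key in RECOMMENDED_FIELDS if not organization.get(key)]

if missing_fields:
    print("Fill in before publishing:", ", ".join(missing_fields))
else:
    org_body = json.dumps(organization, indent=2)
    print(f'<script type="application/ld+json">\n{org_body}\n</script>')
```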

Third-Party Validation: Google validates entities by cross-referencing data. You need consistent mentions of your brand across the web. This includes business directories, press releases, and profiles on authoritative sites like Wikipedia or Wikidata. Wikidata is particularly powerful as a source for Google’s Knowledge Graph. A verified entry there is a strong signal of legitimacy.

Consistent NAP Data: Ensure your Name, Address, and Phone number (NAP) are identical across every platform. Even small discrepancies can cause Google to fragment your entity into duplicate profiles, diluting your authority.
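A NAP audit of this kind could be sketched as follows. The listings are invented, and the normalization (ignoring case, extra whitespace, and phone punctuation) is an assumption; remove it if you want byte-for-byte identical entries.

```python
import re


def normalize_nap(name: str, address: str, phone: str) -> tuple[str, str, str]:
    """Lower-case and collapse whitespace in text fields; keep only phone digits."""
    def squash(text: str) -> str:
        return re.sub(r"\s+", " ", text).strip().casefold()

    return squash(name), squash(address), re.sub(r"\D", "", phone)


def nap_mismatches(reference, listings):
    """Return {platform: [fields that differ from the reference listing]}."""
    fields = ("name", "address", "phone")
    ref = normalize_nap(*reference)
    report = {}
    for platform, listing in listings.items():
        current = normalize_nap(*listing)
        diffs = [f for f, a, b in zip(fields, ref, current) if a != b]
        if diffs:
            report[platform] = diffs
    return report


# Hypothetical data: the website is treated as the source of truth.
website = ("Example Co", "12 Main Street, Springfield", "+1 555 010 0000")
listings = {
    "directory_a": ("Example Co", "12 Main Street, Springfield", "(+1) 555-010-0000"),
    "directory_b": ("Example Co.", "12 Main St, Springfield", "+1 555 010 0000"),
}
nap_report = nap_mismatches(website, listings)
print(nap_report)  # {'directory_b': ['name', 'address']}
```

Here `directory_a` passes because only the phone punctuation differs, while `directory_b` is flagged for the trailing period in the name and the abbreviated street.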

Since we cannot rely on native GSC reports to tell us the truth about AI traffic, we must use advanced filtering techniques to extract the signal from the noise. We will use Regular Expressions (Regex) to isolate the queries most likely to be affected by AI.

AI Overviews and AI Mode are triggered disproportionately by specific types of queries: complex questions, long-tail conversational phrases, and comparisons. By filtering our GSC data for these patterns, we can create a proxy report for “AI-Risk” traffic.

The “Question” Filter: AI Overviews are essentially answer engines. They thrive on questions. You can use the following Regex pattern in the “Query” filter of GSC to isolate all question-based searches:

^(who|what|where|when|why|how)\s

This pattern captures any query that starts with these interrogative words. Apply this filter and look at the trend lines. If you see impressions holding steady but CTR dropping significantly for this specific segment, you are seeing the impact of AI Overviews.

The “Conversational” Filter: Users interacting with AI often use natural language sentences rather than keyword fragments. They might type “tell me the best way to track seo” rather than just “seo tracking.” We can approximate this by filtering for long queries. This Regex captures queries with 7 or more words:

^(\S+\s+){6,}\S+$

These long-tail queries are prime candidates for AI Mode engagement.

The “Comparison” Filter: Comparison queries are another high-risk category. Google’s AI excels at building comparison tables. Use this Regex to find them:

\b(vs|versus|compare|difference|better|best)\b
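The same three filters can also be applied offline to an exported query list. The sketch below restates them in Python's `re` syntax; the word-count pattern is written as `^(\S+\s+){6,}\S+$` so that it matches queries of seven or more words. The segment labels and sample queries are illustrative assumptions.

```python
import re

QUESTION = re.compile(r"^(who|what|where|when|why|how)\s")
CONVERSATIONAL = re.compile(r"^(\S+\s+){6,}\S+$")  # seven or more words
COMPARISON = re.compile(r"\b(vs|versus|compare|difference|better|best)\b")


def ai_risk_segments(query: str) -> list[str]:
    """Return the AI-risk segments a query falls into (possibly none)."""
    q = query.strip().lower()
    segments = []
    if QUESTION.search(q):
        segments.append("question")
    if CONVERSATIONAL.search(q):
        segments.append("conversational")
    if COMPARISON.search(q):
        segments.append("comparison")
    return segments


for sample_query in [
    "how do i track ai traffic in gsc",
    "tell me the best way to track seo",
    "hubspot vs salesforce",
    "seo tracking",
]:
    print(f"{sample_query!r}: {ai_risk_segments(sample_query)}")
```

A query can land in more than one segment, which is intentional: a long question that also contains "best" is exposed on all three counts.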

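Once you have isolated these query segments, you need to perform a Delta Analysis: compare impressions, clicks, and CTR for each segment across two date ranges, and flag the queries where impressions held steady or rose while clicks fell.

These “Zombie” keywords, queries that are still shown but no longer clicked, are your immediate targets for GEO. You are already relevant; now you need to be cited. Review the content for these pages. Does it have a Direct Answer? Is it fresh? Does it have the right Schema? Re-optimizing these specific pages is the highest-ROI activity you can undertake.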
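One way to run such a Delta Analysis is sketched below. It assumes a “Zombie” keyword is a query whose impressions held roughly steady between two date ranges while its clicks fell sharply. The thresholds, function name, and sample figures are all hypothetical and should be tuned to your own data.

```python
def find_zombie_queries(previous, current, max_impression_drop=0.10, min_click_drop=0.30):
    """Flag queries with stable impressions but sharply lower clicks.

    `previous` and `current` map query -> (impressions, clicks) for two
    date ranges. Returns (query, impression_change, click_change) tuples,
    sorted so the steepest click decline comes first.
    """
    zombies = []
    for query, (prev_impr, prev_clicks) in previous.items():
        # Skip queries we cannot compute a relative change for.
        if query not in current or prev_impr == 0 or prev_clicks == 0:
            continue
        cur_impr, cur_clicks = current[query]
        impr_change = (cur_impr - prev_impr) / prev_impr
        click_change = (cur_clicks - prev_clicks) / prev_clicks
        if impr_change >= -max_impression_drop and click_change <= -min_click_drop:
            zombies.append((query, impr_change, click_change))
    return sorted(zombies, key=lambda row: row[2])


# Invented sample data standing in for two GSC exports.
previous_period = {
    "how to calculate roi": (1000, 50),
    "best crm software": (10000, 300),
    "roi formula": (800, 40),
}
current_period = {
    "how to calculate roi": (1050, 20),
    "best crm software": (6000, 180),
    "roi formula": (820, 41),
}
for query, impr_change, click_change in find_zombie_queries(previous_period, current_period):
    print(f"{query}: impressions {impr_change:+.0%}, clicks {click_change:+.0%}")
```

In this sample only "how to calculate roi" is flagged: "best crm software" lost impressions as well as clicks, which points to a ranking problem rather than an AI Overview effect, and "roi formula" is stable on both metrics.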

To truly close the loop, you must combine GSC data with external reality. You cannot rely solely on Google’s reported metrics. Create a “monitoring” list of your top 50 business-critical keywords. Use a tool like SerpApi or manually check these SERPs in incognito mode. Record whether an AI Overview appears and whether your brand is cited. Then, correlate this manual data with your GSC export.

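Sort the keywords into three groups: queries where an AI Overview appears and cites your brand (Group A), queries where an AI Overview appears but does not cite you (Group B), and queries with no AI Overview (Group C). Compare the CTR of these three groups. You will almost certainly find that Group A outperforms Group B significantly. This data is the “smoking gun” you need to prove the value of GEO to your stakeholders. It moves the conversation from “traffic is down” to “we need to win more citations.”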
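The comparison could be sketched as follows, assuming Group A means an AI Overview is present and cites your brand, Group B means an AI Overview is present without a citation, and Group C means no AI Overview appears. The row format and every number are hypothetical stand-ins for your manual SERP checks joined with a GSC export.

```python
def assign_group(has_ai_overview: bool, is_cited: bool) -> str:
    """Map manual SERP observations to the assumed groups A, B, or C."""
    if has_ai_overview and is_cited:
        return "A"
    if has_ai_overview:
        return "B"
    return "C"


def ctr_by_group(rows) -> dict[str, float]:
    """Aggregate clicks and impressions per group, then compute CTR in percent."""
    totals: dict[str, tuple[int, int]] = {}
    for row in rows:
        group = assign_group(row["has_ai_overview"], row["is_cited"])
        clicks, impressions = totals.get(group, (0, 0))
        totals[group] = (clicks + row["clicks"], impressions + row["impressions"])
    return {
        group: round(clicks / impressions * 100, 2) if impressions else 0.0
        for group, (clicks, impressions) in sorted(totals.items())
    }


# Invented monitoring rows for illustration.
monitoring_rows = [
    {"query": "q1", "has_ai_overview": True, "is_cited": True, "clicks": 30, "impressions": 1000},
    {"query": "q2", "has_ai_overview": True, "is_cited": False, "clicks": 6, "impressions": 1000},
    {"query": "q3", "has_ai_overview": False, "is_cited": False, "clicks": 40, "impressions": 1000},
]
group_ctr = ctr_by_group(monitoring_rows)
print(group_ctr)  # {'A': 3.0, 'B': 0.6, 'C': 4.0}
```

CTR is computed from summed clicks and impressions rather than by averaging per-query CTRs, so high-volume queries carry proportionally more weight.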

The transition to AI-first search is a one-way street. We are not going back to the ten blue links. The “Answer Engine” is here, and it demands a fundamental shift in how we approach digital marketing.

The data from 2025/2026 paints a clear picture. For informational queries, the traditional organic click is an endangered species. With click-through rates dropping below 1% for non-cited results in AI environments, the “wait and see” approach is a strategy for obsolescence. However, the data also illuminates the path to growth. The “Citation Advantage”—a 35% boost in traffic for cited sources—proves that organic search is not dying; it is consolidating.

The winners in this new environment will not be the sites with the most backlinks or the highest keyword density. They will be the sites that function as Sources of Truth. They will be the brands that have established undeniable Entity Authority, that structure their content for machine readability, and that provide the fresh, data-rich facts that LLMs crave.

The “Black Box” of Google Search Console is frustrating, but it is navigable. By combining technical precision with strategic content restructuring, you can turn the AI disruption into a competitive advantage. You have the data; now you must execute the strategy.
